Download PDF Version at http://www.wekoenig.de/KoenigDissertation2010.pdf

Design and evaluation of novel input devices and interaction techniques for large, high-resolution displays

Dissertation

submitted for the academic degree of

Doctor of Natural Sciences (Dr.rer.nat.)

at the University of Konstanz, subject Computer Science,

Department of Computer and Information Science,

Section of Mathematics and Natural Sciences

presented by

Werner A. König

Date of the oral examination: 16 September 2010

Referees:

Prof. Dr. Harald Reiterer

Prof. Dr. Oliver Deussen

Chair of the examination committee:

Prof. Dr. Marc Scholl

Abstract

Large, high-resolution displays (LHRDs) can visualize a large amount of very detailed information, but they also introduce new challenges for human-computer interaction. Limited human visual acuity and field of view force users to physically move around in front of these displays either to perceive object details or to obtain an overview. Conventional input devices such as mouse and keyboard, however, restrict users' mobility by requiring a stable surface on which to operate and thus impede fluid interaction and collaborative work settings.

In order to support the investigation of alternative input devices or the design of new ones, we present a design space classification which enables the methodical exploration and evaluation of input devices in general. Based on this theoretical groundwork we introduce the Laser Pointer Interaction, which is especially designed to satisfy the demands of users interacting with LHRDs with respect to mobility, accuracy, interaction speed, and scalability. In contrast to the indirect input mode of the mouse, our interactive laser pointer supports a more natural pointing behaviour based on absolute pointing. We describe the iteratively developed design variants of the hardware input device as well as the software toolkit which enables distributed camera-based tracking of the reflection caused by the infrared laser pointer. In order to assess the general feasibility of the laser pointer interaction for LHRDs, an experiment on the basis of the ISO standard 9241-9 was conducted comparing the interactive laser pointer with the current standard input device, the mouse. The results revealed that the laser pointer’s performance in terms of selection speed and precision was close to that of the mouse (around 89% at a distance of 3 m), although the laser pointer was handled freely in mid-air without a stabilizing rest.

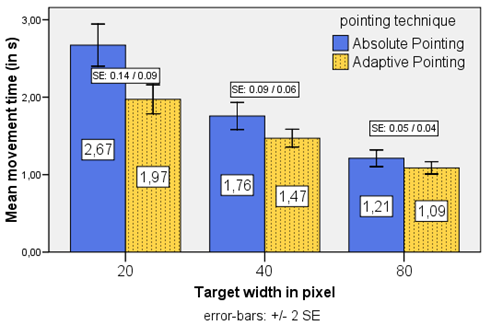

Since natural hand tremor and human motor precision limit further improvement of pointing performance, in particular when interacting from distant positions, we investigate precision enhancing interaction techniques. We introduce Adaptive Pointing, a novel interaction technique which improves pointing performance for absolute input devices by implicitly adapting the Control-Display gain to the current user’s needs without violating users’ mental model of absolute-device operation. In order to evaluate the effect of the Adaptive Pointing technique on interaction performance, we conducted a controlled experiment with 24 participants comparing Adaptive Pointing with pure absolute pointing using the interactive laser pointer. The results showed that Adaptive Pointing yields a significant improvement over absolute pointing in terms of movement time (19%), error rate (63%), and user satisfaction.

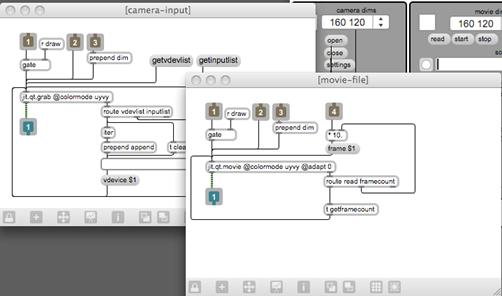

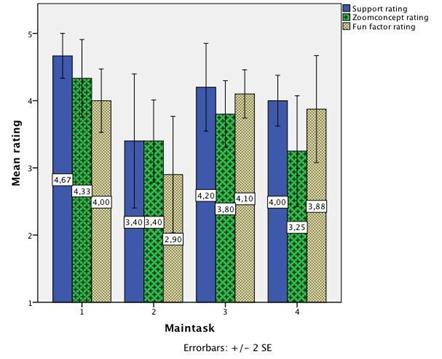

As we experienced in our own research, the development of new input devices and interaction techniques is very challenging, since it is poorly supported by common development environments and requires in-depth and broad knowledge of diverse fields such as programming, signal processing, network protocols, hardware prototyping, and electronics. We introduce the Squidy Interaction Library, which eases the design and evaluation of new interaction modalities by unifying relevant frameworks and toolkits in a common library. Squidy provides a central design environment based on high-level visual data flow programming combined with zoomable user interface concepts. The user interface offers a simple visual language and a collection of ready-to-use devices, filters, and interaction techniques which facilitate rapid prototyping and fast iterations. The concept of semantic zooming nevertheless enables access to more advanced functionality on demand. Thus, users are able to adjust the complexity of the user interface to their current needs and knowledge. The Squidy Interaction Library has been iteratively improved alongside the research of this thesis and through its use in various scientific, artistic, and commercial projects. It is free software and is published under the GNU Lesser General Public License.

Summary

Large, high-resolution displays (LHRDs) make it possible to present very extensive and complex information spaces with a high level of detail. This key advantage over conventional displays, however, comes with new challenges for human-computer interaction. Owing to their high resolution combined with a display area of several square meters, LHRDs exceed the capabilities of human vision in terms of the required visual acuity and the physiologically limited size of the field of view. To survey the entire LHRD as well as to perceive the information in detail, users have to move physically in the space in front of the display. Conventional input devices such as mouse and keyboard, however, considerably restrict the user's mobility because they require a supporting surface for interaction. This not only prevents individual users from working efficiently and ergonomically, but can also negatively affect productivity and creativity in interactive group work.

To approach this problem, and as a basis for the development and investigation of alternative input devices in general, we present a classification of the design space for input devices. This theoretical foundation enables the methodical development and evaluation of input devices as well as their systematic description and categorization. Based on this classification, we describe and examine our Laser Pointer Interaction, which was developed with the aim of also meeting the particular requirements of operating LHRDs with respect to mobility, accuracy, interaction speed, and scalability. Compared with the indirect operation of the traditional mouse, the Laser Pointer Interaction allows a more natural and more direct form of interaction by means of absolute pointing gestures. We describe various development stages and design variants of the iteratively developed input devices as well as the accompanying software toolkit. The toolkit enables camera-based tracking of the reflection points on LHRDs that are caused by one or more infrared-emitting laser pointers. To account for the high resolution of LHRDs, the software toolkit allows the integration of multiple cameras as well as distributed image analysis with multiple processors on one or several networked computers. The general suitability of the Laser Pointer Interaction for LHRDs was examined in a controlled experiment based on ISO 9241-9. In this experiment, our interactive laser pointer was compared with the current standard input device, the mouse, for controlling the Powerwall of the University of Konstanz. Although the laser pointer was operated freely in mid-air without a stabilizing rest, the evaluation results show that its performance in terms of accuracy and speed was close to that of the mouse (around 89% at an interaction distance of 3 meters).

Based on our studies, we identified natural hand tremor and the limited precision of hand motor control as limiting factors for pointing performance with absolute input devices; they have a clearly negative influence especially at larger interaction distances and high display resolutions. To further improve pointing performance even under these conditions, which are typical for LHRDs, we investigated supporting interaction techniques. In doing so we developed Adaptive Pointing, a new interaction technique that increases pointing performance for absolute input devices by implicitly adapting the Control-Display gain to the user's current needs without violating the user's expectations of absolute pointing behaviour. To examine the effect of this interaction technique, we compared Adaptive Pointing with an unmodified absolute pointing technique in a controlled experiment using the interactive laser pointer. The evaluation results show a significant improvement through Adaptive Pointing in terms of movement time (19%), error rate (63%), and user satisfaction.

The development of novel input devices and interaction techniques is only insufficiently supported, in terms of both hardware and software, by conventional development environments. In addition, it requires detailed knowledge from a wide variety of fields such as programming, signal processing, network protocols, prototype construction, and electronics. To address this problem we developed the Squidy Interaction Library, which supports the realization and evaluation of new forms of interaction and considerably simplifies them by consistently unifying numerous heterogeneous software toolkits and frameworks. Their functionality is accessible not only through a common textual programming interface (API); Squidy also offers its own visual development environment based on visual dataflow programming paired with concepts for zoomable user interfaces. With it, new forms of interaction can be developed iteratively and tested immediately on the basis of existing individual components and a simple visual language. If advanced settings or deeper adaptations of components are required, they are available on demand and selectively by semantically zooming into those components. Accordingly, users can adapt the granularity of the displayed information and of the offered functionality to their current needs and level of knowledge. The Squidy Interaction Library has been iteratively developed and improved on the basis of day-to-day experience in the research presented here as well as through its use in diverse scientific, artistic, and commercial projects. The Squidy Interaction Library is open source and published under the GNU Lesser General Public License.

Parts of this thesis were published in:

· König, W.A., Rädle, R., & Reiterer, H., 2010. Interactive Design of Multimodal User Interfaces – Reducing technical and mental complexity. Journal on Multimodal User Interfaces, 3(3), 197-212.

· König, W.A., Gerken, J., Dierdorf, S., Reiterer, H., 2009. Adaptive Pointing – Design and Evaluation of a Precision Enhancing Technique for Absolute Pointing Devices. Interact 2009: Proceedings of the twelfth IFIP conference on Human-Computer Interaction. Berlin, Germany: Springer LNCS, pp. 658-671.

· König, W.A., Rädle, R., Reiterer, H., 2009. Visual Design of Multimodal Interaction - Bridging the Gap between Interaction Designers and Developers. Workshop on the Challenges of Engineering Multimodal Interaction: Methods, Tools, Evaluation. Sankt Augustin / Bonn, Germany.

· König, W.A., Rädle, R., Reiterer, H., 2009. Squidy: A Zoomable Design Environment for Natural User Interfaces. CHI '09: Proceedings of the 27th international conference extended abstracts on Human factors in computing systems. New York, NY, USA: ACM, pp. 4561-4566.

· König, W.A., Gerken, J., Dierdorf, S., Reiterer, H., 2009. Adaptive Pointing – Implicit Gain Adaptation for Absolute Pointing Devices. CHI '09: Proceedings of the 27th international conference extended abstracts on Human factors in computing systems. New York, NY, USA: ACM, pp. 4171-4176.

· Jetter, H.C., König, W.A., Reiterer, H., 2009. Understanding and Designing Surface Computing with ZOIL and Squidy. CHI 2009 Workshop - Multitouch and Surface Computing. Boston, USA.

· Foehrenbach, S., König, W.A., Gerken, J., Reiterer, H., 2009. Tactile Feedback enhanced Hand Gesture Interaction at Large, High-Resolution Displays. Journal of Visual Languages & Computing, 20(5), 341-351.

· König, W.A., 2008. Visual and Physical Interaction Design for Information Services. Invited Talk at the international conference on Human-Computer Interaction and Information Services. Prague, Czech Republic.

· König, W.A., Böttger, J., Völzow, N., Reiterer, H., 2008. Laserpointer-Interaction between Art and Science. IUI'08: Proceedings of the 13th international conference on Intelligent User Interfaces. New York: ACM Press, pp. 423-424.

· Foehrenbach, S., König, W.A., Gerken, J., Reiterer, H., 2008. Natural Interaction with Hand Gestures and Tactile Feedback for large, high-res Displays. MITH'08: Workshop on Multimodal Interaction Through Haptic Feedback, held in conjunction with AVI'08. Napoli, Italy.

· König, W.A., Bieg, H.-J., Reiterer, H., 2007. Laserpointer-Interaktion für große, hochauflösende Displays. Mensch & Computer 2007: Interaktion im Plural, 7. Konferenz für interaktive und kooperative Medien. Weimar: Oldenbourg Verlag, pp. 69-78.

· König, W.A., Bieg, H.-J., Schmidt, T., Reiterer, H., 2007. Position-independent interaction for large high-resolution displays. IHCI'07: Proceedings of IADIS International Conference on Interfaces and Human Computer Interaction. Lisbon, Portugal: IADIS Press, pp. 117-125.

Contents

1.1 Interacting with large, high-resolution displays

1.2.1 Laser Pointer Interaction

1.2.3 Squidy Interaction Library

2 Large, high-resolution displays

3 Designing Input Devices for LHRDs

3.2 Design Space Classification

3.3.3.1 Technical Requirements and Solutions

3.3.3.3 Interactive Laser Pointer @ Design Space Classification

3.3.4.1 Participants and Design

3.3.5 Globorama: Laser Pointer Interaction between Art and Science

4 Precision Enhancing Pointing Techniques

4.2.2.1 Materials & Participants

5 Interactive Design of Multimodal User Interfaces

5.2 Squidy Interaction Library

5.2.2.3 Interactive Configuration & Evaluation

5.2.2.5 Embedded Code and on-the-fly compilation

5.2.2.6 Dataflow Visualization - Visual Debugging

5.2.3 Formative Evaluation Study

1 INTRODUCTION


1.1 Interacting with large, high-resolution displays

Large, high-resolution displays (LHRDs), which typically provide several million pixels and cover entire walls, enable new ways of information visualization, analysis and communication. Thanks to the high display resolution, complex information spaces can be visualized in high detail and within a large context. In contrast to conventional displays, which by their nature require displayed information to be aggregated or reduced, LHRDs let users overview a much greater amount of data and identify interesting patterns or relations at a glance. Moreover, additional perspectives on the data can be visualized side by side, facilitating multi-dimensional comparison and analytical reasoning. As a result of the larger display dimensions in combination with the high resolution, these advantageous features of LHRDs can be utilized by individual users as well as by multiple users in parallel for co-located collaboration.

A further advantage of LHRDs resulting from their large dimensions is the possibility of visualizing full-size sketches and early CAD[1]-prototypes of cars or other industrial products. Using LHRDs, designers and engineers can discuss, modify, and evaluate virtual prototypes in a photorealistic, full-scale setting with less need for time-consuming and expensive physical prototypes [Buxton et al. 2000]. Thanks to the realistic setting they provide, even customer studies can be conducted in the very early design phases.

LHRDs differ from conventional displays, however, not only in their technology and in their resulting features, but also in their usage especially in terms of users’ requirements, tasks, and behaviour. LHRDs are far more expensive than standard desktop displays and the utilized hardware and software technology is more complex. At least in the near future, LHRDs will not replace conventional displays but will instead be used in addition to them for specific tasks and situations in which the advantages of LHRDs are especially beneficial. LHRDs are used to a lesser degree for information creation tasks such as writing emails or papers. They are, however, being increasingly used in both industry and science for information presentation, analysis, and modification.

A great challenge and open issue in utilizing LHRDs is the method of interacting with them. In order to accomplish their tasks, users need an input technique suitable for expressing their needs such as controlling the information presentation and manipulating the displayed information.

Compared to conventional displays, LHRDs pose differing and new requirements which have to be considered: Due to the higher resolution of LHRDs, input devices are required which enable users to efficiently navigate hundreds of millions of pixels. At the same time, the input device should also offer the precision to effectively select or manipulate small objects, even if placed several meters away from the user’s position at the far end of the display. Since LHRDs match or even exceed the capabilities of the human visual system in terms of spatial resolution and field of view, physical navigation is required to a large extent in order to perceive all of the pixels and to take full advantage of these displays. Therefore, users move in front of these displays and require input devices that accommodate this necessary mobility. Moreover, such input devices should also function in a collaborative setting with multiple users and multiple identical or different devices in parallel.

We will address these challenges by introducing a novel input device – an interactive laser pointer – that is especially designed to support users in interacting with LHRDs. We will further introduce an appropriate interaction technique – Adaptive Pointing – that enhances pointing precision of the interactive laser pointer and absolute pointing devices in general in order to enable efficient and effective interaction even from distant positions as well as with high pixel densities. With these two key contributions we provide an answer to the following research question: Which input technologies are suitable for supporting users in interacting with large, high-resolution displays?

Our third key contribution does not relate to a specific input technology, but addresses the challenge of designing them. Designing novel input devices and interaction techniques is a very demanding and complex task: practical knowledge of different layers of the system and its design process is required, ranging from hardware prototyping, operating system drivers, communication protocols and signal processing, to application programming interfaces and the final application. There are tools which provide specialized functionalities such as blob detection or feature recognition. The incorporation of such tools, however, in order to enable human-computer interaction (from sensing physical properties to the transition of the system state) often fails due to the monolithic design of the tools or incompatible requirements concerning the hardware setting, operating system, and/or programming language. This complexity increases when designing multimodal interfaces which provide multiple input modalities such as speech, pen, touch or gaze input [Oviatt 2008]. We address these challenges by introducing the Squidy Interaction Library which is designed to provide an answer for the following research question: How can we support the design and evaluation of novel input devices and interaction techniques for large, high-resolution displays?

1.2 Research Objectives

In the following, we give a brief overview of the key contributions of this thesis, which are discussed in detail in chapters 3, 4, and 5.

1.2.1 Laser Pointer Interaction

Due to the large number of pixels and the large dimensions of LHRDs, users have to move around in front of these displays to gain either in-depth knowledge or an overview. However, conventional input devices such as mouse and keyboard restrict users' mobility by requiring a stable surface on which to operate. In order to systematically design and evaluate a new input device addressing this mobility requirement, we identify the design space of input devices in general and describe them in a new design space classification. Based on this theoretical groundwork, we introduce in chapter 3 a flexible pointing device based on an infrared laser pointer that allows identical use from any point and distance. In particular, our laser pointer interaction satisfies the demands of LHRDs in the areas of mobility, accuracy, interaction speed, and scalability. The solution presented is technically designed as a generic interaction toolkit whose flexibility and general suitability were verified by using it with two very different systems: a planar 221″ Powerwall and a curved 360° panoramic display. Furthermore, a comparative evaluation study with 16 participants was conducted on the Powerwall in order to compare the performance of a conventional mouse and our interactive laser pointer by means of a unidirectional tapping test at varying distances (ISO 9241-9). The results revealed that the laser pointer’s performance in terms of selection speed and precision was close to that of the mouse (around 89% at a distance of 3 m), although the laser pointer was handled freely in mid-air without a stabilizing rest. However, the experiment results also suggest that, due to trembling of the user’s hand, the laser pointer’s performance deteriorates significantly with increasing distance.
Since the natural hand tremor and human motor precision limit further improvement of pointing performance in particular when interacting from distant positions, we investigate precision enhancing interaction techniques and introduce a further contribution of this thesis – Adaptive Pointing.
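To make the kind of measure behind an ISO 9241-9 tapping test more concrete, the following minimal sketch computes Fitts' index of difficulty and the effective throughput commonly used with that standard. It is an illustration only: the function names and the trial data are hypothetical, and the sketch does not claim to reproduce the analysis of the study described above or the meaning of the 89% figure.

```python
import math
import statistics


def index_of_difficulty(distance, width):
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return math.log2(distance / width + 1)


def effective_throughput(distances, endpoint_errors, movement_times):
    """Throughput in bits/s from the per-trial data of one condition.

    distances       -- movement distance of each trial (e.g. in pixels)
    endpoint_errors -- signed selection error along the movement axis
    movement_times  -- duration of each trial in seconds
    """
    effective_distance = statistics.mean(distances)
    # The effective width spans about 96% of the observed selection endpoints.
    effective_width = 4.133 * statistics.stdev(endpoint_errors)
    effective_id = index_of_difficulty(effective_distance, effective_width)
    return effective_id / statistics.mean(movement_times)


# Hypothetical trial data, for illustration only (not measurements from the study).
tp = effective_throughput(
    distances=[700, 700, 700, 700],
    endpoint_errors=[-10, 10, -10, 10],
    movement_times=[1.0, 1.1, 0.9, 1.0],
)
print(f"throughput: {tp:.2f} bits/s")
```

Because throughput folds speed and endpoint scatter into a single number, it allows two devices to be compared even if users trade speed for accuracy differently with each of them.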

1.2.2 Adaptive Pointing

In chapter 4, we present Adaptive Pointing, a novel approach to addressing the common problem of accuracy when using absolute pointing devices for distant interaction. First, we discuss related work concerning the problem domain of pointing accuracy when using absolute or relative pointing devices. This motivates our introduction of a novel classification scheme for more clearly discriminating between different approaches. Second, the Adaptive Pointing technique is presented and described in detail. The intention behind this approach is to improve pointing performance for absolute input devices by implicitly adapting the Control-Display gain to the current user’s needs without violating users’ mental model of absolute-device operation. Third, we present an experiment comparing Adaptive Pointing with pure absolute pointing using a laser pointer as an example of an absolute device. The results show that Adaptive Pointing yields a significant improvement over absolute pointing in terms of movement time (19%), error rate (63%), and user satisfaction.
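The general idea of implicit gain adaptation can be pictured with a deliberately simplified, one-dimensional sketch. It is not the formulation given in chapter 4: the function `adaptive_step`, the speed thresholds, and the minimum gain are hypothetical values chosen only to show how a gain below 1 can damp slow, precise movements while fast movements restore pure absolute pointing.

```python
def clamp01(value):
    """Limit a value to the range [0, 1]."""
    return max(0.0, min(1.0, value))


def adaptive_step(cursor, prev_raw, raw, dt,
                  v_slow=200.0, v_fast=1000.0, min_gain=0.2):
    """Return the new cursor position for one 1-D input sample.

    cursor   -- currently displayed cursor position (pixels)
    prev_raw -- previous absolute device position (pixels)
    raw      -- current absolute device position (pixels)
    dt       -- time since the previous sample (seconds)
    The thresholds and min_gain are made-up illustration values.
    """
    delta = raw - prev_raw
    speed = abs(delta) / dt
    # 0.0 = slow, precise movement; 1.0 = fast, ballistic movement.
    blend = clamp01((speed - v_slow) / (v_fast - v_slow))
    gain = min_gain + (1.0 - min_gain) * blend
    moved = cursor + gain * delta
    # Fast movements also remove the offset that built up while the gain
    # was below 1, so the cursor returns to the true pointing position.
    return moved + blend * (raw - moved)


slow = adaptive_step(cursor=100.0, prev_raw=100.0, raw=101.0, dt=0.01)
fast = adaptive_step(cursor=98.0, prev_raw=100.0, raw=120.0, dt=0.01)
```

In this sketch a slow movement of one pixel moves the cursor by only a fraction of a pixel, which attenuates tremor, whereas a fast movement places the cursor exactly on the device position again, so the user's impression of an absolute device is preserved.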

1.2.3 Squidy Interaction Library

In contrast to the pioneers of multimodal interaction, e.g., Richard Bolt in the late seventies, today's researchers in this domain can benefit from various existing hardware devices and software toolkits. Although these development tools are available, employing them is still challenging, particularly in terms of their usability and their appropriateness to the actual design and research process. In chapter 5, we present a three-part approach to supporting interaction designers and researchers in designing, developing, and evaluating novel input devices and interaction modalities. First, we present a software architecture that enables the unification of a great variety of very heterogeneous device drivers and special-purpose toolkits in a common interaction library named "Squidy". Second, we introduce a visual design environment that minimizes the threshold for its usage (ease-of-use) but scales well with increasing complexity (ceiling) by combining the concepts of semantic zooming with visual dataflow programming. Third, we not only support the interactive design and rapid prototyping of multimodal interfaces but also provide advanced development and debugging techniques to improve technical and conceptual solutions. In addition, we offer a test platform for controlled comparative evaluation studies as well as standard logging and analysis techniques for informing the subsequent design iteration. Squidy therefore supports the entire development lifecycle of multimodal interaction design, in both industry and research.
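The dataflow idea mentioned above, in which devices, filters, and outputs are connected into a pipeline, can be sketched in a few lines. This is a hypothetical illustration in Python and does not use or mirror the actual Squidy API; the names `smoothing_filter`, `to_pixels`, and `run_pipeline` are invented for this example.

```python
from typing import Iterable, Iterator, Tuple

Sample = Tuple[float, float]  # (x, y) position in normalized display coordinates


def smoothing_filter(samples: Iterable[Sample], alpha: float = 0.5) -> Iterator[Sample]:
    """Filter node: exponentially smooth a stream of positions."""
    state = None
    for x, y in samples:
        if state is None:
            state = (x, y)
        else:
            state = (alpha * x + (1 - alpha) * state[0],
                     alpha * y + (1 - alpha) * state[1])
        yield state


def to_pixels(samples: Iterable[Sample], width: int, height: int):
    """Output node: convert normalized positions to display pixels."""
    for x, y in samples:
        yield (round(x * width), round(y * height))


def run_pipeline(source, *nodes):
    """Connect the nodes in order and collect what leaves the last one."""
    stream = source
    for node in nodes:
        stream = node(stream)
    return list(stream)


# Hypothetical input samples standing in for a tracked pointing device.
raw = [(0.0, 0.0), (1.0, 1.0), (1.0, 1.0)]
result = run_pipeline(raw, smoothing_filter, lambda s: to_pixels(s, 4640, 1920))
```

Each node only consumes and produces a stream of samples, so a filter can be inserted, removed, or exchanged without touching the device or the application at either end of the pipeline.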

1.3 Thesis Outline

Having motivated the research objectives of this thesis in the introduction, the second chapter discusses the unique characteristics of LHRDs and gives an overview of empirical findings concerning the benefits of the increased resolution and display size as compared to conventional desktop displays. Furthermore, the limitations of the human visual system are discussed and the need for physical navigation is motivated.

Chapter 3 presents a new classification scheme for the design space of input devices in general which is the theoretical groundwork for our laser pointer interaction introduced thereafter. The hardware and software design of the interactive laser pointer is discussed and the empirical study which was conducted to assess the usability of the laser pointer is described. The chapter concludes by illustrating the Globorama installation, a real-world use case of the laser pointer.

With the aim of further enhancing the pointing performance of the interactive laser pointer (and absolute pointing devices in general), natural hand tremor and human motor precision are identified as limiting factors in chapter 4. Based on these insights and on a review of related techniques, the Adaptive Pointing technique is introduced and its mathematical description is given. In order to examine the benefits of Adaptive Pointing compared to pure absolute pointing, a controlled experiment was conducted, which is described in the last part of chapter 4.

An overview of existing development environments for designing novel input devices and in particular for designing multimodal interfaces is given at the beginning of chapter 5. Thereafter, the Squidy Interaction Library is introduced. The framework architecture as well as the user interface design of the visual design environment is explained. For the benefit of further design iterations a formative study was conducted which is described subsequently. The chapter concludes by presenting case studies in which the Squidy Interaction Library has already been utilized.

Chapter 6 sums up the main results of the research described in this thesis.

2 Large, high-resolution displays


Large, high-resolution displays (LHRDs) differ from conventional desktop monitors in various aspects. Technologically speaking, they provide larger physical dimensions as well as much higher pixel resolutions. From the user’s perspective, however, this leads to an increased need for physical navigation. When designing specific input devices for LHRDs, these particular characteristics of LHRDs as well as their consequences for human-computer interaction have to be considered. In this chapter, we will discuss these in more detail and provide an overview of the benefits of increased resolution and display size. We will furthermore discuss how the limitations of the human visual system, in terms of visual acuity and field of view, influence users’ physical navigation. This chapter motivates our research concerning LHRDs presented in the following chapters and introduces the general theoretical and empirical background.

2.1 Characteristics of LHRDs

Large, high-resolution displays vary greatly in the technology they use and in their geometry. What they have in common is that their resolution and physical size are much larger than those of conventional desktop displays. For the sake of clarity we have categorized today’s displays into the four main classes illustrated in Figure 1. The class of LHRDs contains displays which are large enough to enable multiple users to work in parallel, in contrast to desktop monitors that are designed for single users. In addition, LHRDs provide a number of pixels large enough for visualizing very complex information spaces, roughly starting at three to five million pixels. Thanks to recent advances in display technology, LHRDs can be built that offer even hundreds of millions of pixels. Thus, the class of LHRDs covers a wide range of resolutions and heterogeneous display technologies. In the following sections, we will discuss two factors, display resolution and physical size, while focusing on their impact on human-computer interaction and their implications for the design of suitable input devices.

Figure 1: Display classification schema – four categories and corresponding examples distinguished by the dimensions display resolution and physical size.

2.1.1 Display resolution

LHRDs offer resolutions that are multiple times higher than those of current standard high-definition displays (1920 x 1080 pixels). With such an increased number of pixels, very complex and large information spaces can be visualized at a glance. This is a key advantage of LHRDs for many research and application fields such as Information Visualization or Visual Analytics: a vast amount of data can be represented in great detail without sacrificing context or content. Here, human judgement is needed for finding relevant patterns or interesting values [Thomas & Cook 2005]. LHRDs provide the possibility of visualizing large information sets in great detail as well as allowing for additional perspectives on the data, accentuating specific features such as time dependencies or spatial relations (e.g., based on the concept of multiple coordinated views [North & Shneiderman 2000]). Thus, the user is able to obtain an overview of the data and to find and compare interesting items simultaneously. Thanks to the increased resolution as compared to conventional displays, LHRDs facilitate human insight, discovery and analytical reasoning in general. On conventional displays with lower resolutions, even medium-sized information spaces have to be reduced and/or clipped in order to fit. These reductions in information quantity could potentially hide interesting information through clustering or aggregation. LHRDs are also limited in their number of pixels, but provide very high resolutions that even surpass human visual capabilities (see section 2.3, p. 31). Users are less restricted by LHRDs and are therefore able to fully utilise their perceptual abilities.

Figure 2: The "Visible Human Female" data set is rendered in high resolution on the GRIS Display Wall in Tübingen with a resolution of 10240 x 6400 pixels and a size of 2.8 x 2 meters. The wall consists of 4x4 30-inch LCDs and is powered by a cluster of 16 high-performance computers [Parys & Knittel 2009].

From a technical perspective, there are two major approaches for achieving high display resolutions such as those offered by LHRDs: tiled LCD[2] panels and projection-based seamless displays [Ni, Schmidt, et al. 2006]. A tiled LCD panel (see Figure 2) consists of an array of multiple LCD displays that are arranged as flat or curved display walls. The arrangement of the individual LCDs is very flexible, but introduces borders between each tile breaking up the continuity of the LHRD. The display bezels segment the LHRD which then causes a visual distortion of the displayed image and an interaction distortion when the cursor crosses the bezels [Robertson et al. 2005]. Projection-based seamless displays avoid these distortions by combining multiple projectors which are arranged in an array in order to generate a single and homogeneous large, high-resolution image (see Figure 3). These displays can have almost any shape and size, but the configuration of the projection geometry and the calibration of the individual colour and brightness levels are rather complex. In comparison to the LCD solution, these projectors are more expensive and require more space due to the optical projection distance, but they provide the great advantage of a seamless image and the potential for stereoscopic imaging.

Figure 3: PixelMap Visualization of the US census data is displayed on the Powerwall of the University of Konstanz[3]. The Powerwall offers a resolution of 4640 x 1920 pixels and a size of 5.2 x 2.15 meters. The rear-projection display is driven by 8 projectors that render a very homogeneous image thanks to soft-edge blending and image synchronization.

The interactive rendering of high-resolution images with millions of pixels is a very performance- and memory-consuming task that exceeds the capabilities of standard graphics cards. Specialized high-end multi-GPU visual computing systems such as the NVIDIA Quadro Plex[4] offer the possibility of rendering images with a total resolution of almost 36 megapixels[5]. However, the actual interactivity of the system – measured in frames per second – depends highly on the rendering engine of the application (e.g. OpenGL, Direct3D, or GDI) and the type of data (e.g. pixel, voxel, or vector graphics). These visual computing systems can be connected to a conventional computer and act – from the user’s perspective – like a standard graphics card: there is no need for software modifications or special restrictions when rendering applications on a LHRD with these solutions. However, if higher resolutions or faster frame rates are required, more scalable solutions are needed. An alternative and more modular approach is based on the combination of multiple high-performance graphics computers for cluster-based rendering. Here, the rendering process is distributed over a set of computers that are connected via a high-throughput network [Staadt et al. 2003]. In recent years, several software toolkits have been developed which manage the distribution of the rendering operations and their synchronization over the network. However, these toolkits are limited to specific rendering engines (e.g. the Chromium toolkit [Humphreys et al. 2002] extends the OpenGL engine) and require code modifications of the applications to be distributed (e.g. OpenSG [Voß et al. 2002], VRJuggler [Bierbaum et al. 2001], and Equalizer [Eilemann et al. 2009]). Thus, the usability of LHRDs driven by such distributed rendering solutions is limited, since proprietary software such as Microsoft PowerPoint cannot be modified to function with these toolkits.

2.1.2 Physical display size

The second differentiating characteristic of LHRDs is their physical size, more precisely, the physical dimension of the displayed viewport. The dimensions of LHRDs are typically much larger than conventional desktop displays. The Powerwall at the University of Konstanz, for example, has a display diagonal of approximately 221 inches (see Figure 3), which is about 10 times larger than a current standard desktop display. With physical dimensions of 5.2 x 2.15 meters the Powerwall covers an entire wall and enables – along with its high resolution – multiple users to work together or in parallel independently. Large, low-resolution projections are widely-used for presentation environments, but provide too few pixels to accommodate multiple user settings, e.g., individual display space and focus. In contrast, LHRDs are suitable for use as shared displays enabling multiple users to work in parallel while adhering to social protocols of personal space and without the need of formal turn-taking [Tuddenham & Robinson 2006]. They support group communication as electronic whiteboards or peripheral displays e.g., for brainstorming sessions [Guimbretière et al. 2001]. They facilitate social and situational awareness that are crucial for co-located team collaboration [Kulyk et al. 2008], [Greenberg & Rounding 2001]. Furthermore, LHRDs can be used to provide lightweight information about group activity [Huang & Mynatt 2003] and thus support shared activities and knowledge.
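The diagonal quoted for the Powerwall can be checked from its stated width and height. The following is a minimal sketch; the function name `diagonal_inches` and the 22-inch desktop diagonal are assumptions made for illustration and do not come from the original text.

```python
import math

METERS_PER_INCH = 0.0254


def diagonal_inches(width_m: float, height_m: float) -> float:
    """Return the diagonal of a rectangular display in inches."""
    return math.hypot(width_m, height_m) / METERS_PER_INCH


# Powerwall dimensions as stated in the text: 5.2 m x 2.15 m.
powerwall_diagonal = diagonal_inches(5.2, 2.15)

# Assumed diagonal of a "current standard desktop display" (illustrative only).
DESKTOP_DIAGONAL_IN = 22.0

print(f"Powerwall diagonal: {powerwall_diagonal:.1f} inches")
print(f"Ratio to desktop display: {powerwall_diagonal / DESKTOP_DIAGONAL_IN:.1f}")
```

With these inputs the diagonal comes out slightly above 221 inches, which is consistent with the stated factor of about 10 if a desktop display of roughly 22 inches is assumed.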

A further advantage of LHRDs resulting from their large dimensions is the possibility of visualizing full-size sketches and early CAD[6]-prototypes of cars or other industrial products. Using LHRDs, designers and engineers can discuss, modify and evaluate virtual prototypes in a photorealistic, full-scale setting with less need for time-consuming and expensive physical prototypes [Buxton et al. 2000]. Thanks to the realistic setting they provide, even customer studies can be conducted in the very early design phases.

“Getting the styling of the car correct early in the design process is extremely important. In this, the scale at which the designs are rendered play a surprisingly critical role. For example, the primary curves that define a car’s style may look perfectly fine at quarter scale but elicit a completely different emotional response at full scale.” [Buxton et al. 2000].

Thus, LHRDs can improve and shorten the development lifecycle in engineering and design by visually communicating ideas in better quality and realistic size and by facilitating group discussion and customer involvement.

Another advantage stemming from LHRDs’ large dimensions is the potential for physically exploring them. On conventional displays the user navigates an information space in a purely virtual manner by changing the current viewport via mouse or keyboard input, resulting in an accordant replacement of the displayed information on the screen (e.g., panning on a geographical map). Thanks to the physical dimensions of LHRDs the user has – in addition or as an alternative to virtual navigation – the ability to physically navigate the information space by bodily movement in front of the screen [Ball & North 2005]. This means that instead of changing the displayed information with virtual navigation, on LHRDs the information can remain but the user changes her position and orientation for navigation (eye-, head-, or body-movement). For example, the user steps forward to see details or steps back to obtain an overview (see section 2.3.1, p. 31 for details about human visual acuity).

Physical navigation offers a very direct and natural mode of interaction since it builds upon our elementary navigation strategies from the real world. It therefore avoids the additional cognitive load that virtual navigation introduces (e.g., handling of the input device, manual control of viewport, and reorientation in the changed information view). Although physical navigation is more direct and natural and imposes less cognitive load, it is questionable whether it is as efficient as virtual navigation. If a significant change of the viewport is desired and an eye- or head-movement is not sufficient, the user has to move her body to the required location – instead of moving a (small) input device for virtual navigation. In recent years, several empirical experiments have been conducted that address the question of efficiency and the general benefits of LHRDs resulting from the higher resolution and the larger physical dimensions.

2.2 Evaluation studies

Shupp et al. [2006] investigated user performance time, accuracy, and mental workload on geospatial search, route tracing and comparison tasks with different display resolutions and physical sizes. They compared a single monitor configuration (1280 x 1024 pixels) to a tiled LHRD with 12 monitors (4 x 3 display array with 5120 x 3072 pixels) and a tiled LHRD with 24 monitors (8 x 3 display array with 10240 x 3072 pixels). They found that user frustration was significantly lower with larger displays. The participants tended to use more physical navigation (although this was limited by the provided input devices) and less virtual navigation in the larger display conditions and this change also correlated with improved user performance. They concluded that the participants might have benefited from the naturalness of physical navigation and the reduction of potential frustrations of virtual navigation [Shupp et al. 2006].

Figure 4: Tiled LCD panel with 24 monitors as flat (left) and as curved (right) wall display [Shupp et al. 2006].

In the same experiment, Shupp et al. [2006] also investigated the effect of the curvature of the display as an additional independent variable. They compared the flat configuration of the tiled LCD to a horizontally curved variant (see Figure 4). The experiment results showed that curving the display around the user decreased task time regardless of the viewport size (12 versus 24 monitors). Of all display conditions, user performance was the best on the curved twenty-four monitor condition [Shupp et al. 2006]. In the flat condition, the user had to cover a large distance to get from the left to the right border of the display. The curved configuration had the advantages that all pixels of the display had the same distance to the centre point and that this distance was defined according to the human visual acuity (see section 2.3.1, p. 31). Thus, all pixels were resolvable solely with head and eye movements and less physical walking was required. This optimality in display curvature, size and resolution also implies a great disadvantage: The curved design does not scale well with increasing display resolution and size, since human visual acuity limits the maximum viewing distance (radius of the circular arc) depending on the pixel density (resolution/size). Furthermore, the restricted space inside the curved display also limits the number of users and thus constrains multi-user interaction as well as co-located collaboration.

In a follow-up study, Ball et al. [2007] examined in particular the relationships between display size (viewport), amount of physical and virtual navigation, and user task performance in the context of LHRDs. The experimental setting of the above-mentioned experiment of Shupp et al. restrained users in their physical movement while interacting with the system, since the provided input devices, mouse and keyboard, required a stable surface (e.g. a table) for their proper operation (see Figure 4). Ball et al. also used the flat 8 x 3 tiled LCD panel, but provided participants with a wireless Gyration mouse that offers the flexibility to interact in mid-air (see Figure 5 left & right). Hence, the participants had full mobility while interacting with the LHRD. In order to measure the extent of participants’ physical navigation, they wore a hat equipped with retro-reflective markers which were tracked by an infra-red tracking system (see Figure 5 centre).

Figure 5: Participant using the wireless Gyration mouse with the 8x3 tiled LCD panel (left); the hat used to track participants’ position (centre) [Ball et al. 2007]. Wireless Gyration[7] mouse (right).

The 32 participants performed four different tasks on a geographic map of the Houston area with embedded geo-referenced data about 3,500 houses for sale: navigation to a target, search for a target, pattern finding for a group of targets, and open-ended insight for a group of targets. For the navigation tasks, the participants were asked to navigate to a given house on the map and to read aloud the corresponding attributes of the house. The house was already shown on the screen, but in order to see the textual attributes navigation (zooming) might have been required (dependent on the display condition). For the search task, participants had to find houses that had particular attributes (e.g. a price between $100,000 and $110,000). In the pattern finding tasks, participants were asked to identify specific clusters or correlations of the displayed houses or their attributes. The insight tasks were designed as open-ended tasks in which participants wrote down insights gained in the experience. No performance time was measured in the insight task and participants were given a rolling lecture stand on which to write. This task is sparsely described in the paper and the physical constraints limit its value for this discussion. The first three tasks, however, required a range of levels of detail, and hence participants had to navigate (zooming) physically and/or virtually.

The participants performed the navigation and search tasks (within-subject design) on all eight viewport width conditions (from 1 to 8 display columns, each with 3 displays in the vertical direction). For all display conditions, the task began with a best fitting overview of the same area of Houston. Although the displayed map sections were slightly different due to the different aspect ratios of the viewport conditions, the participants received the same starting point and a similar overview. However, the larger display conditions showed more details at once thanks to the increased amount of screen pixels available (higher resolution).

The results showed a significant effect of viewport width on performance times for the navigation and search tasks. For example, for the navigation task, the performance time was reduced by a factor of 3.5, from 18.6 seconds on the one column display setup to 5.2 seconds on the eight column display setup [Ball et al. 2007]. In the search task, performance time was reduced by a factor of 2. In summary, the results showed that larger viewport sizes lead to a significant and considerable improvement of task performance, at least for navigation and search tasks. There was only a near-significant trend for the pattern finding task.

As with performance time, the amount of virtual navigation (zooming and panning via wireless mouse) also decreased with increasing viewport size. Ball et al. [2007] found that the number of virtual zooms and pans correlated with performance, while physical distance travelled did not. Physical navigation seems to be more efficient, since the larger viewports led, in general, to more physical navigation but to shorter performance times. The performance advantage of LHRDs is not only caused by less virtual and more efficient physical navigation; the participants also changed their navigation strategies and heuristics with increasing display size. As the participants were given the ability to see more of the overview and details at once, they were generally observed to make more intelligent navigation decisions [Ball et al. 2007].

A further interesting finding of Ball et al. [2007] concerns the subjective preference of the participants. When possible, participants preferred to physically navigate. For several task conditions, all 32 participants chose to use only physical navigation to complete their task. Ball et al. [2007] observed that participants first physically navigated as much as possible before virtually navigating. This subjective preference is also underlined by an initial study of Ball & North [2005] in which they reported that the participants felt less frustration with larger viewports and had a greater sense of confidence concerning their responses. These results are also in line with the findings of Shupp et al. [2006] discussed before.

All of the experiments discussed thus far used the parameters display resolution and display size as a combined variable and manipulated both together by switching on more display tiles on a tiled LCD panel. Although both resolution and display size are the main parameters separating conventional displays from LHRDs, they can have different effects on the experimental results or on the benefit of a particular display in general. From these experiments, it is not clear whether higher resolution or larger physical size leads to the better user performance, and consequently whether one of them alone would be sufficient for producing similar results.

Ni et al. [2006] separated the parameters display size and resolution by comparing four different display conditions, consisting of two different display sizes (21.6 versus 55 inches in diagonal) and two different resolutions (1280 x 720 versus 2560 x 1440 pixels, see Figure 6). Another difference between this and the previously discussed studies is the task domain. The 32 participants were asked to navigate in an Information-Rich Virtual Environment (IRVE), a three-dimensional multi-room art museum, in contrast to the previous studies in which participants navigated in a two-dimensional geographic map. However, all experiments had in common that a two-dimensional projection technique was used (instead of stereoscopic imaging).

Figure 6: Display variants to evaluate individual and combined effects of display size and resolution on task performance: IBM T221 LCD as small display with high and alternatively low resolution (left). Rear-projection screen as large, low-resolution display (centre). VisBlocks: projection-based large, high-resolution display (right) [Ni et al. 2006].

The participants were asked to navigate through the virtual museum while searching for and comparing different paintings and their metadata (e.g., artist or price). The results revealed significant main effects of both size and resolution. The display size seemed to play a dominant role, since the participants performed better with both large display setups than with the small setups (independent of their resolution). Ni et al. [2006] also reported that the participants felt more present when experiencing large displays.

Figure 7: Average task performances for the two resolution (low/high) x two display size (small/large) conditions. Results show main effects of display size and resolution. Error bars illustrate standard errors [Ni et al. 2006].

Overall, the large, high-resolution display outperformed the other three conditions. In this setup, participants could make use of peripheral vision for navigation and orientation and they were also able to see more labels at once. This effect is remarkable since the resolution of the large, high-resolution display (3.7 megapixels) in this experiment was not very high compared to the LHRDs used in the previously discussed experiments (31.5 megapixels in the 8 x 3 condition of Shupp et al. [2006] and Ball et al. [2007]).
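The megapixel figures compared above follow directly from the pixel resolutions reported for the individual studies. A minimal sketch of the arithmetic is given below; the function name `megapixels` and the dictionary labels are illustrative choices and do not appear in the cited papers.

```python
def megapixels(width_px: int, height_px: int) -> float:
    """Return the total pixel count of a display in megapixels."""
    return width_px * height_px / 1e6


# Resolutions as reported in the studies discussed in this section.
displays = {
    "Ni et al.: low resolution": (1280, 720),
    "Ni et al.: high resolution": (2560, 1440),
    "Shupp et al.: 4 x 3 tiles": (5120, 3072),
    "Shupp et al. / Ball et al.: 8 x 3 tiles": (10240, 3072),
}

for label, (width, height) in displays.items():
    print(f"{label}: {megapixels(width, height):.1f} megapixels")
```

The 8 x 3 tiled panel thus offers roughly 8.5 times as many pixels as the high-resolution conditions of Ni et al., which underlines how modest the resolution in that experiment was.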

In summary, the experiments showed that LHRDs are beneficial for users at least when navigating in two- and three-dimensional information spaces and searching for as well as comparing information objects. The higher resolution and larger display sizes of LHRDs compared to conventional desktop displays enable better task performances, but also offer subjective advantages. The participants were less frustrated and benefited from the naturalness of physical navigation [Shupp et al. 2006]. They had more of a sense of confidence about their responses [Ball & North 2005] and felt more present in the larger and thus more immersive display conditions [Ni, Bowman, et al. 2006]. Moreover, the participants were generally observed to make more intelligent navigation decisions when using the LHRDs [Ball et al. 2007]. In general, the increased display size and resolution lead to more physical navigation, although the LHRDs are able to visualize all relevant information at once. The reason underlying the increased amount of physical navigation is quite obvious when considering the human visual capabilities.

2.3 Human visual capabilities

With recent advances in technology, LHRDs providing hundreds of millions of pixels covering entire walls can be constructed. One of the LHRDs with the highest resolution worldwide (see Multi-Megapixel Display List[8] for the ranking) is the Stallion cluster of the Texas Advanced Computing Center that provides 307 megapixels based on a flat 15x5 tiled LCD panel (see Figure 8). Here, the pixel pitch, the size of a single pixel, is just 0.25 mm, but the entire display has a width of over 10 meters. Thus, the user has to come close to the display to perceive the detailed information represented by a few pixels or to step back to overview the entire LHRD from a distant position. This physical navigation of the user is caused by the limitation of the human visual system in terms of visual acuity and field of view.

Figure 8: Stallion Cluster at the University of Texas: Ultra high-resolution display with 15x5 30 inch Dell LCD monitors and an overall resolution of 307 megapixels[9].

2.3.1 Visual acuity

The ability of a person to visually resolve a spatial pattern is referred to as visual acuity. This term was coined by Franciscus Donders (1818-1889) to describe the sharpness of vision. Herman Snellen (1834-1908) later defined normal visual acuity as the ability to resolve a spatial pattern separated by a visual angle of one minute of arc. Thus, if a user is to be able to perceive an individual pixel (0.25 mm) on the Stallion Cluster, for example, she has to be closer than 85.9 cm to the display wall (see Equation 2). With larger viewing distances the individual pixels merge with their neighbours and the information represented on the pixel-level disappears. Since the Stallion Cluster has a display width of more than 10 meters, it is clear that there is no single position from which a person will be able to perceive all 307 megapixels. Thus, the user perceives and explores the information displayed on a LHRD as she is accustomed to exploring information in the real world (e.g., searching for a book in a large book shelf) by physically moving in front of the LHRD.

Figure 9: Visual angle α of an object measured from the optical centre of the eye (adapted from [Ware 2004]).

$$\alpha = 2 \arctan\left(\frac{h}{2d}\right)$$

Equation 1: Calculation of the visual angle α with viewing distance d and object size h.

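$$d_{max} = \frac{h}{2 \tan\left(\alpha / 2\right)}, \qquad \alpha = \left(\tfrac{1}{60}\right)^{\circ}$$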

Equation 2: Calculation of the maximal viewing distance to perceive and distinguish between individual pixels (visual angle α = 1/60°) with normal visual acuity (also noted as 20/20 vision).
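The distance quoted for the Stallion Cluster can be reproduced numerically. The sketch below assumes the standard geometric relation tan(α/2) = h/(2d) between visual angle α, object size h and viewing distance d; the function names are illustrative and not part of the original work.

```python
import math

ONE_ARC_MINUTE_DEG = 1 / 60  # normal (20/20) visual acuity


def visual_angle_deg(size: float, distance: float) -> float:
    """Visual angle (degrees) subtended by an object of the given size."""
    return math.degrees(2 * math.atan(size / (2 * distance)))


def max_viewing_distance(size: float, angle_deg: float = ONE_ARC_MINUTE_DEG) -> float:
    """Largest distance at which an object of the given size still subtends angle_deg.

    The result is in the same unit as `size`.
    """
    return size / (2 * math.tan(math.radians(angle_deg) / 2))


# A single 0.25 mm pixel of the Stallion Cluster.
d_one_pixel_mm = max_viewing_distance(0.25)
print(f"One pixel:  {d_one_pixel_mm / 10:.1f} cm")   # about 85.9 cm

# A pattern two pixels wide (0.5 mm) stays resolvable up to twice that distance.
d_two_pixels_mm = max_viewing_distance(0.5)
print(f"Two pixels: {d_two_pixels_mm / 10:.1f} cm")  # about 171.9 cm
```

Because the angle is very small, the distance scales almost exactly linearly with the size of the pattern to be resolved.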

However, for certain visualization techniques and information spaces, it is critical to be able to see each individual pixel, such as the PixelMaps technique used to visualize census data in Figure 3. In order to read a displayed text it is also essential to be able to distinguish the different characters. The Latin alphabet has several characters that look very similar and only a single or few pixels separate them from each other with common font types and sizes:

il ij fl l! oc EF QO 83 ‘’ ,. ,;

If the viewing distance is too large the characters blur and i, l or ! look practically identical, for example. The importance of this challenge is underlined by the fact that reading characters (standardized optotypes) is the most common method of assessing individual visual acuity (see Figure 10).

For other information domains it could be useful for at least neighbouring pixels to merge, for example when rendering photorealistic images where the perception of an individual pixel could compromise the naturalness of the image. Here, the visualization on the LHRD should create the illusion of a continuous real-world but with the help of discrete pixels, thus a slightly larger viewing distance could be beneficial (e.g., neighbouring pixels merge for viewing distances roughly between 86 cm and 172 cm in the Stallion Cluster). However, if the viewing distance becomes too large, small details also become imperceptible. There is thus no optimal viewing distance for a display, but there is an appropriate range of distances for a specific information content and layout. Since LHRDs are mostly wider and higher than this distance span, users have to move physically in front of a LHRD in order to perceive all displayed information from the upper-left to the lower-right display corner. An exception to this could be a spherical LHRD, since the distance between each pixel and the centre is identical (radius of the sphere) and can be optimized dependent on the desired pixel density and information visualization, but this high degree of specialisation makes the usage of such a display very inflexible. However, a further limitation of the human visual system makes physical navigation – even in a spherical display – inevitable.

Figure 10: Snellen chart[10] with different sizes of standardized optotypes to assess human visual acuity.

2.3.2 Field of view

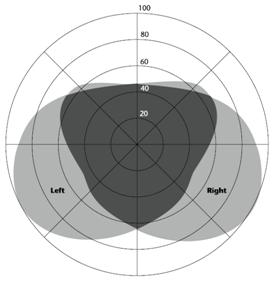

Due to the placement of human eyes, the combined field of view of both eyes covers about 180 degrees horizontally (forward-facing) [Ware 2004]. That means when a human with normal vision gazes straight ahead, the light reflected off of or originating from objects placed in a horizontal angle of about ± 90 degrees of the viewing direction impinges on one (monocular) or both (binocular vision with depth information) of her retinas. The field of view with binocular vision covers only about 120 degrees horizontally. The vertical field of view is much smaller than the horizontal as is illustrated in Figure 11. Users’ at-a-glance experience is therefore limited by the visual field of view. This fact limits our ability to analyse large information spaces quickly and to compare information objects directly. However, this is further limited by the anatomy of the eye and the distribution of its visual receptors.

The type of visual receptors and their density is unevenly distributed on the retina. The area with the highest resolution and the best colour sensing, which consequently is the area with the best acuity, is very small and roughly at the centre of the retina. This area is named fovea and covers only about two degrees of the field of view [Dowling 1987]. Humans perceive their environment by saccadic movements of the eyes in order to position the fovea in the direction of interesting details followed by a short fixation. Thus, there is already physical movement needed to successively build up a clear mental image of one’s environment or the information visualized on a LHRD. However, the saccadic movements are mostly subconscious, very direct and require minimal physical exertion. More physical navigation is needed if the desired information is on the border or outside of the field of view. Therefore, eye movements are combined with head and body movements, increasing physical load and the degree of consciousness associated with the movements.
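To give a sense of how small the foveal region is relative to a LHRD, the following sketch estimates how much of a display falls within it. It assumes, for illustration only, a foveal extent of exactly two degrees, the 0.25 mm pixel pitch of the Stallion Cluster, and a viewing distance of about 86 cm at which a single pixel subtends one minute of arc; the function name is hypothetical.

```python
import math


def extent_at_distance(angle_deg: float, distance_mm: float) -> float:
    """Width (mm) of the region that subtends angle_deg at distance_mm."""
    return 2 * distance_mm * math.tan(math.radians(angle_deg) / 2)


FOVEA_DEG = 2.0              # assumed foveal extent
PIXEL_PITCH_MM = 0.25        # Stallion Cluster pixel pitch
VIEWING_DISTANCE_MM = 859.4  # distance at which one pixel subtends 1 arc minute

foveal_width_mm = extent_at_distance(FOVEA_DEG, VIEWING_DISTANCE_MM)
pixels_across = foveal_width_mm / PIXEL_PITCH_MM

print(f"Foveal region on the display: {foveal_width_mm:.1f} mm wide")
print(f"Pixels across the foveal region: {pixels_across:.0f}")
```

Under these assumptions the region seen with best acuity is only about 3 cm or roughly 120 pixels wide, so even at the closest useful distance the eye has to scan a display that is more than 10 meters wide piece by piece.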

Figure 11: The human visual field of view when gazing straight ahead. The fields are constrained by facial features e.g. the nose. The darker-gray overlap shows the region of binocular vision [Ware 2004].

As previously discussed, spherical displays provide the advantage that all pixels are equidistant to the centre and the pixel density can be optimized for human visual acuity. Due to the limited field of view, however, this solution also requires physical navigation (at least head and bodily movement) of the user to perceive all parts of the display. That applies likewise to cylindrical displays such as the 360° ZKM PanoramaScreen illustrated in Figure 12.

Figure 12: 360° PanoramaScreen of the ZKM Karlsruhe[11] displaying the artistic installation Globorama. Users move physically inside the immersive display and explore the virtual world with the help of an interactive laser pointer [König et al. 2008].

2.4 Summary

Wall-sized displays with millions of pixels offer various advantages compared to conventional desktop monitors. The increased display real estate of LHRDs provides the possibility of visualizing very complex information spaces at a glance. In addition to virtual zooming and panning, the user is also able to physically explore the “information wall” and to utilize natural navigation strategies well-known from the real world. Several empirical studies have shown that LHRDs enable better performance times for navigation, search and comparison tasks as well as giving users more of a sense of confidence. However, LHRDs also introduce new challenges for the design of appropriate input devices. A fundamental requirement is support for physical mobility while interacting with a LHRD. Conventional input devices such as a mouse and keyboard require a stable surface, which impedes free movement and fluid interaction. We will address this challenge in the next chapter, introducing an input device specially designed to enable interaction with LHRDs even from distant positions.

3 Designing Input Devices for LHRDs

Contents

3.2 Design Space Classification

3.3.3.1 Technical Requirements and Solutions

3.3.3.3 Interactive Laser Pointer @ Design Space Classification

3.3.4.1 Participants and Design

3.3.5 Globorama: Laser Pointer Interaction between Art and Science

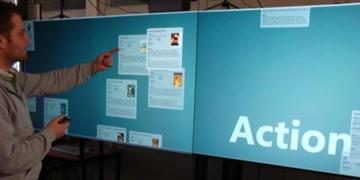

In the previous chapter we discussed the specific characteristics of LHRDs and the benefits of their usage as compared with conventional desktop monitors. However, they also introduce new challenges for human-computer interaction, particularly for the design of suitable input devices. Due to the higher resolution of LHRDs, input devices are required that enable users to efficiently navigate hundreds of millions of pixels. At the same time, the input device should also offer the precision to effectively select or manipulate small objects, even if placed several meters away from the user’s position at the far end of the display (e.g., activating the Windows Start Button at the lower-left corner or the Close Button (x) on the upper-right corner in case of a standard Microsoft Windows desktop). Moreover, not only has the distance between the user and the display increased tremendously compared to a conventional desktop monitor setting, but this distance can also vary dramatically. Since LHRDs match or even exceed the capabilities of the human visual system in terms of spatial resolution and field of view – as discussed in the previous chapter – to a large extent physical navigation is required to perceive all of the pixels and to take full advantage of these displays. Therefore, users move in front of these displays and require input devices that accommodate this necessary mobility. This critical requirement poses several fundamental issues: the device should work wirelessly (in terms of data transmission and power supply), should be easy to transport (lightweight and comfortable handling), should work anywhere in front of the LHRD (without restrictions such as a table as in the case of mouse input), and the interaction speed and accuracy should scale well with larger distances and extreme positions. Moreover, this device should also function in a collaborative setting with multiple users and multiple identical or different devices in parallel.

In order to investigate these requirements more systematically we created a classification scheme for the design space of input devices in general and the corresponding usability measures. This will enable interaction designers to explore diverse design alternatives and to discuss and evaluate them systematically. Based on this groundwork we developed a new input device that is designed specifically to fulfil the particular needs of users interacting with LHRDs: an interactive laser pointer that offers fast and precise interaction from almost any position and distance. We will discuss the hardware and software design of our solution in the following sections and present an empirical study we conducted to assess the usability of the laser pointer method of interaction. Furthermore, we will present an example use case of the laser pointer: the artistic installation Globorama. Here, the laser pointer was used in two exhibitions with thousands of visitors. This offered the opportunity to complement the empirical findings of our experiments with more qualitative insights gained through observations and questionnaires in a real world scenario.

3.1 Design Space & Measures

“Input devices sense physical properties of people, places, or things”, [Hinckley 2008]. They are the controls enabling humans to embody their mental actions in digital operations. A simplified model of human-computer interaction is illustrated in Figure 13. The communication can be seen as a closed-loop system in which the human receives information about the current machine state through visual stimulation provided by a display, the output device. Other human senses, such as hearing, touch, or proprioception, can also be incorporated in the information transmission. The human perceives and processes the information and responds by using the input devices, which results in a manipulation of the machine state. The terms input and output are defined with respect to the machine [MacKenzie 1995]. Typically, input devices are controlled by human limbs such as hands, arms, and feet. However, input devices can also track eye and head motions, interpret speech commands, or even translate specific activation patterns of the human brain into predefined digital operations (e.g., moving an object).

Figure 13: The human-machine interface, a closed-loop model of the human-computer interaction with input and output devices as intermediaries. The input devices are the controls humans manipulate in order to change the machine state [MacKenzie 1995].

Over the last 35 years, several taxonomies for input devices have been presented, each focusing on specific properties and covering the input devices of its time. Foley & Wallace [1974] identified common data types and features shared by all input devices in the context of interactive computer graphics. They defined five primitive virtual devices that can be used to represent or simulate the full variety of input devices and their data types. The assumption was that “[...] every physical input device can be treated as a physical realization of one, or several, of just five distinct virtual devices: the pick, the button, the keyboard, the locator, and the valuator”, [Wallace 1976]. The main intention was to achieve device independence in order to facilitate program portability, reusability of existing algorithms, and interface standardization.

· Locator: the locator is a virtual device used to indicate a position and/or orientation with respect to the display space. Prototypes of the locator are pointing devices such as the mouse or the joystick.

· Pick: the pick is used to designate user-defined objects (e.g., drawn lines, curves, or text) by pointing directly at them. A prototype of the pick is a lightpen. The pick produces a hit event which carries a reference to the selected graphics object (in contrast to the locator, which carries the location without referencing objects).

· Button: with the button a predefined program action can be performed including the generation of a specified symbol or a function key. The button can be combined with a pointing device in order to trigger a selection (e.g., mouse button) or multiple buttons can be bundled together simulating a keyboard.

· Keyboard: the keyboard is a virtual device that is used to input text using standardized characters.

· Valuator: the valuator is used to determine a numerical value. A prototype for a one-dimensional valuator is a potentiometer.
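The idea of device independence behind the five virtual devices can be illustrated with a minimal Python sketch. It is not taken from Foley & Wallace; the class names, the `mouse_click` and `lightpen_hit` mappings, and the action label `"select"` are hypothetical and only show how application code could depend on virtual device types rather than on physical hardware.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union


@dataclass(frozen=True)
class Locator:
    """A position in display space, without reference to any object."""
    position: Tuple[float, float]


@dataclass(frozen=True)
class Pick:
    """A hit event carrying a reference to the selected graphics object."""
    object_id: str


@dataclass(frozen=True)
class Button:
    """A trigger for a predefined program action."""
    action: str


@dataclass(frozen=True)
class Keyboard:
    """Text input using standardized characters."""
    text: str


@dataclass(frozen=True)
class Valuator:
    """A single numerical value, e.g. read from a potentiometer."""
    value: float


VirtualInput = Union[Locator, Pick, Button, Keyboard, Valuator]


def mouse_click(x: float, y: float) -> List[VirtualInput]:
    # Hypothetical mapping: a physical mouse realizes a locator plus a button.
    return [Locator((x, y)), Button("select")]


def lightpen_hit(object_id: str) -> List[VirtualInput]:
    # Hypothetical mapping: a lightpen realizes a pick.
    return [Pick(object_id)]


def describe(event: VirtualInput) -> str:
    # Application code sees only virtual devices, never the hardware.
    if isinstance(event, Locator):
        return f"locator at {event.position}"
    if isinstance(event, Pick):
        return f"pick of object {event.object_id}"
    if isinstance(event, Button):
        return f"button action {event.action}"
    if isinstance(event, Keyboard):
        return f"text {event.text!r}"
    return f"value {event.value}"


events = mouse_click(10.0, 20.0) + lightpen_hit("line-7")
descriptions = [describe(e) for e in events]
```

Replacing the mouse with another pointing device would, under this sketch, only require a new mapping function; `describe` would remain unchanged.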

Foley & Wallace [1974] defined these virtual devices based on their experiences in the interactive computer graphics domain and focused on improving efficiency by identifying common features between existing input devices. When designing new input devices, however, it can be more inspiring to observe device differences and specific characteristics. Buxton [1983] introduced a taxonomy for continuous input devices that illustrates the diversity of the devices and the design space in general based on a tabular categorization (see Figure 14). He identified several independent design dimensions that form the design space: the dimensionality of the input (1D, 2D, 3D), the property that is being sensed (position, motion, pressure), the agent of control (hand, foot, voice), and the interaction directness (touch or mechanical intermediary).

Figure 14: Tableau of Continuous Input Devices (adapted from [Buxton 1983]). The input devices are categorized by the first order dimensions property sensed (rows) and number of dimensions sensed (columns). “Subrows distinguish between devices that have a mechanical intermediary (such as a stylus) between the hand and the sensing mechanism (indicated by M), and those which are touch sensitive (indicated by T). Subcolumns distinguish devices that use comparable motor control for their operation” [Buxton 1983]. An updated online version is available at http://www.billbuxton.com/lexical.html.

Card et al. [1990] extended the taxonomy of Buxton by also integrating discrete input devices such as a keyboard. Similar to Buxton’s Tableau of Continuous Input Devices, the taxonomy of Card et al. is also represented in a tabular matrix, but it provides more details concerning the dimensions and the classified input devices (see Figure 15). The dimensionality is split explicitly into linear and rotary dimensions, and each sensing feature of an input device is represented on its own (e.g., a three-button mouse senses linear movements in the x- and y-dimension and tracks the position of three physical buttons). This taxonomy is more expressive; however, it still covers relatively few design dimensions and does not span the entire design space of input devices (e.g., hardware design or mobility). Both taxonomies thus provide a good framework to categorize, discuss, and illustrate the diversity of input devices, but focus only on some major dimensions and high-level properties. When designing new input devices, a complete description of the fundamental dimensions spanning the design space would be helpful, enabling a more systematic approach to design and engineering.

Figure 15: Input device taxonomy of Card et al. [1991] representing a three-button mouse and a radio control with station chooser (slider), selection-knob (OFF, AM, FM) and volume control. “Circles are used to indicate that a device senses one of the physical properties shown on the vertical axis along one of the linear or rotary dimensions shown on the horizontal axis. For example, the circle representing the radio volume control indicates a device that senses an angle about the Z axis. The position in a column indicates the number of values that are sensed (i.e., the measure of the domain set). For example, the circle representing the selection control represents a discrete device. Lines are used to connect the circles of composite devices. A black line represents a merge composition (such as the X and Y components of the mouse). The dashed line represents a layout composition (such as the three buttons on a mouse, represented by a circle with a 3 in it to indicate identical devices)”, [Card et al. 1991].
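The composition described in the caption can be approximated as a small data structure. The following Python sketch is an illustration only, not the notation of Card et al.: the class names, the axis labels (`"rZ"` for rotation about Z), and the assumptions that the mouse buttons sense position along Z and that the button group joins the x/y pair through a layout composition are all hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple, Union

INFINITE = float("inf")  # size of the domain set of a continuous sensor


@dataclass(frozen=True)
class Sensor:
    sensed: str      # physical property, e.g. "movement", "position", "angle"
    axis: str        # linear ("X", "Y", "Z") or rotary ("rX", "rY", "rZ")
    values: float    # number of values sensed (measure of the domain set)
    copies: int = 1  # identical devices, like the "3" inside the button circle


@dataclass(frozen=True)
class Composite:
    kind: str  # "merge" or "layout"
    parts: Tuple[Union["Sensor", "Composite"], ...]


def count_sensors(node: Union[Sensor, Composite]) -> int:
    """Count the individual sensing elements of a (composite) device."""
    if isinstance(node, Sensor):
        return node.copies
    return sum(count_sensors(part) for part in node.parts)


# Three-button mouse: x and y movement are merged, the buttons are laid out.
mouse = Composite("layout", (
    Composite("merge", (
        Sensor("movement", "X", INFINITE),
        Sensor("movement", "Y", INFINITE),
    )),
    Sensor("position", "Z", 2, copies=3),  # axis of the buttons is assumed
))

# Radio volume control: senses an angle about the Z axis.
volume = Sensor("angle", "rZ", INFINITE)

mouse_sensor_count = count_sensors(mouse)
```

Under these assumptions the three-button mouse consists of five sensing elements: two merged movement sensors and three identical buttons.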

3.2 Design Space Classification

In order to achieve a more thorough description of the design space we conducted a literature review concerning input device taxonomies, classifications, and evaluation methods. We extracted features that describe the functionality and quality of an input device with respect to human-computer interaction. We categorized these features along the high-level classification of MacKenzie [1995] who distinguishes between device properties and parameters, as follows:

“Properties are the qualities which distinguish among devices and determine how a device is used and what it can do”, [MacKenzie 1995]. The features presented by Buxton and Card et al., such as dimensionality and sensed property, can be classified as device properties, for example. These properties are specified by the interaction designer and fixed by the utilized sensing technology and hardware design. A mouse, for example, senses movements in two dimensions. This property is based on a design decision and cannot be adjusted or optimized without changing the general functionality of the input device.

“A parameter is any characteristic of a device or its interface which can be tuned or measured along a continuum of values”, [MacKenzie 1995]. Parameters are features of the specific device implementation, such as the weight of the hardware device or the resolution of the sensor. Higher sensor resolution can be beneficial for interaction speed and accuracy, but would not change the overarching interaction concept – in contrast to the device properties.
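The distinction between fixed properties and tunable parameters can be mirrored in code. The sketch below is a hypothetical illustration, not part of MacKenzie's classification: the class and field names are invented, and the weight and resolution values are placeholders rather than measured data.

```python
from dataclasses import dataclass, FrozenInstanceError


@dataclass(frozen=True)
class DeviceProperties:
    """Fixed by design: changing these yields a different kind of device."""
    sensed_property: str
    dimensions: int


@dataclass
class DeviceParameters:
    """Tunable along a continuum without altering the interaction concept."""
    weight_g: float
    sensor_resolution_dpi: int


@dataclass
class InputDevice:
    name: str
    properties: DeviceProperties
    parameters: DeviceParameters


# Placeholder values, chosen only for illustration.
mouse = InputDevice(
    name="mouse",
    properties=DeviceProperties(sensed_property="motion", dimensions=2),
    parameters=DeviceParameters(weight_g=100.0, sensor_resolution_dpi=800),
)

# A parameter can be tuned; the device remains a two-dimensional mouse.
mouse.parameters.sensor_resolution_dpi = 1600

# A property cannot be adjusted on an existing device.
try:
    mouse.properties.dimensions = 3
    property_changed = True
except FrozenInstanceError:
    property_changed = False
```

Freezing the properties is one possible design choice; it makes the difference between the two categories explicit at the type level.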

We therefore project our analysis of the design space onto these two major categories, which help to describe the functionality of an input device. In order to assess the quality of an input device, diverse measures can be used, each of which focuses on specific aspects. We complement our categorization of the design space by illustrating an example set of usability measures that are suitable for assessing the quality of input devices with respect to human-computer interaction. This assortment of measures derives from our literature review and is categorized with respect to [ISO-9241-11: 1998], which defines effectiveness, efficiency, and satisfaction as the main usability measures. An overview of our classification is illustrated in Figure 16 and the individual items are discussed below.

Figure 16: Classification of the design space of input devices and corresponding usability measures.

· Input device properties

o Physical property sensed

Category: Input device properties

Reference: [Buxton 1983], [Hinckley 2008], [Jacob 1996]

Input devices sense physical aspects or properties of the user, the user’s environment, or the hardware input device itself. The most common physical properties being sensed are position, motion, and pressure. For example, touch screens track the absolute position of a finger, a mouse measures relative movements (the change in position), and isometric joysticks measure the pressure imposed by users upon the stationary stick (e.g., IBM pointing stick). However, other physical properties can be utilized for human-computer interaction as well, such as room or body temperature, light intensity, sound level, electric current, or capacitance.
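The difference between sensing absolute position and sensing relative motion can be made concrete with a short Python sketch. The function names, the display size, and the sample values are hypothetical; the sketch only assumes that a relative device reports changes in position which have to be accumulated, whereas an absolute device reports the position itself.

```python
from typing import Iterable, Tuple

Point = Tuple[int, int]


def clamp(value: int, low: int, high: int) -> int:
    """Restrict a coordinate to the valid range of the display."""
    return max(low, min(value, high))


def apply_relative(cursor: Point, deltas: Iterable[Point], size: Point) -> Point:
    """Mouse-like input: each report is a change in position (dx, dy)."""
    x, y = cursor
    for dx, dy in deltas:
        x = clamp(x + dx, 0, size[0] - 1)
        y = clamp(y + dy, 0, size[1] - 1)
    return (x, y)


def apply_absolute(touch: Point, size: Point) -> Point:
    """Touch-screen-like input: each report is the position itself."""
    return (clamp(touch[0], 0, size[0] - 1), clamp(touch[1], 0, size[1] - 1))


display = (1920, 1080)  # assumed display size in pixels
mouse_cursor = apply_relative((100, 100), [(5, -3), (10, 0)], display)
touch_cursor = apply_absolute((640, 480), display)
```

With relative input the resulting cursor position depends on the previous position, while with absolute input it depends only on the current report.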

o Type of motion

Category: Input device properties

Reference: [Card et al. 1991], [Hinckley 2008], [Jacob 1996]

When sensing position, motion, or pressure, the direction of the force imposed by the user can be differentiated. For example, physical sliders or buttons offer a linear movement in contrast to rotary knobs that offer angular modifications. In case of gestures (e.g., finger, stylus or mouse gestures), combinations of linear and angular motions are often used in order to trigger predefined commands, to sketch geometric shapes, or to write characters.

o Continuous vs. discrete input

Category: Input device properties

Reference: [Buxton 1983], [Jacob 1996]

Currently, there are two large groups of input devices, which are mostly described as pointing devices (e.g., mouse) and text input devices (e.g., keyboard). A more formal differentiation is based on the type of their input set: continuous versus discrete input. Continuous input devices such as pointing devices sense physical properties of an analogue, continuous world with an infinite set of input values (infinite domain set), although, from a technological point of view, the input values are discretized by the digital sensing technology. Keyboards, buttons in general, and also some rotary knobs provide discrete input, since they are designed to handle only a finite number of input values. They provide a limited number of states, mostly only two: key/button pressed and key/button released.
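The following Python sketch illustrates both cases. It is a simplified assumption rather than a description of any particular sensor: `digitize` stands for a hypothetical sensing step with a given bit depth that maps a continuous value onto a finite number of levels, whereas `ButtonState` is discrete by design.

```python
from enum import Enum


class ButtonState(Enum):
    """A discrete input with exactly two states."""
    RELEASED = 0
    PRESSED = 1


def digitize(value: float, bits: int) -> int:
    """Map a continuous value in [0.0, 1.0] to one of 2**bits sensor levels."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must lie in [0.0, 1.0]")
    levels = 2 ** bits
    # The upper bound 1.0 is mapped to the highest level instead of overflowing.
    return min(int(value * levels), levels - 1)


mid_level = digitize(0.5, bits=10)  # conceptually continuous, technically quantized
top_level = digitize(1.0, bits=10)
button_states = len(ButtonState)    # discrete by design
```

Increasing `bits` refines the approximation of the continuous value, whereas the number of button states is fixed by the design of the device.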

o Degree of freedom

Category: Input device properties

Reference: [Buxton 1983], [Hinckley 2008], [Jacob 1996]

The degrees of freedom (DOF) specify the total number of dimensions an input device senses. A button senses linear movement in one dimension, a mouse measures relative movements in two dimensions, and, with the help of an optical tracking system, the position of objects equipped with retro-reflective markers such as a flystick can be tracked in a three-dimensional space. Moreover, if the orientation of the flystick can also be identified, the flystick provides a total of six degrees of freedom. Most pointing devices are augmented with buttons to trigger selections or special short-cuts. The number of degrees of freedom of such a composite device increases with the number of buttons (e.g., 6 DOF Flystick + 2 Buttons = 8 DOF). The three spatial dimensions belonging to the tracking in space are closely related, since users can change the values in all three dimensions by performing just one action: moving the flystick along the space diagonal, for example. These related dimensions are called integral dimensions. The dimensions of the buttons in the flystick example have to be modified independently and thus are denoted as separable dimensions [Jacob 1996].
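The flystick example can be written down as a small calculation. In the Python sketch below, the class and function names are hypothetical, and treating the three orientation dimensions as one integral group is an assumption; the text above states integrality explicitly only for the three spatial dimensions.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DimensionGroup:
    name: str
    dims: int        # number of dimensions sensed by this group
    integral: bool   # True if the dimensions can change in a single action


def total_dof(groups: List[DimensionGroup]) -> int:
    """Sum the sensed dimensions over all groups of a composite device."""
    return sum(group.dims for group in groups)


flystick = [
    DimensionGroup("position (x, y, z)", 3, integral=True),
    DimensionGroup("orientation", 3, integral=True),  # integrality assumed
    DimensionGroup("button 1", 1, integral=False),
    DimensionGroup("button 2", 1, integral=False),
]

dof = total_dof(flystick)
separable_dof = total_dof([g for g in flystick if not g.integral])
```

The sum reproduces the count given above: six tracked dimensions plus two buttons yield eight degrees of freedom, two of which are separable.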

o Agent of control / input modality

Category: Input device properties

Reference: [Buxton 1983]

In order to communicate with computers, humans may utilize diverse interaction modalities. To mention just a few examples, they can voice verbal commands, move physical input devices by hand, point with finger gestures to control the screen cursor, select objects by looking at them, change values with foot pedals, or, in the future, may navigate just by thinking. Although the most common input devices, mouse and keyboard, are controlled manually, human communication capabilities are very rich. Multimodal interfaces support two or more user input modes that can be used either individually or simultaneously [Oviatt 2008]. Most multimodal interfaces are implemented by combining the interaction data of multiple unimodal input devices such as voice commands and finger positions (e.g., Put-that-there, [Bolt 1980]). However, multiple input modes can also be integrated within one hardware device, allowing for richer interaction. A general design